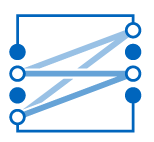

We present an information-theoretic cost function for co-clustering, i.e., for simultaneous clustering of two sets based on similarities between their elements. By constructing a simple random walk on the corresponding bipartite graph, our cost function is derived from a recently proposed generalized framework for information-theoretic Markov chain aggregation. The goal of our cost function is to minimize relevant information loss, hence it connects to the information bottleneck formalism. Moreover, via the connection to Markov aggregation, our cost function is not ad hoc, but inherits its justification from the operational qualities associated with the corresponding Markov aggregation problem. We furthermore show that, for appropriate parameter settings, our cost function is identical to well-known approaches from the literature, such as Information-Theoretic Co-Clustering of Dhillon et al. Hence, understanding the influence of this parameter admits a deeper understanding of the relationship between previously proposed information-theoretic cost functions. We highlight some strengths and weaknesses of the cost function for different parameters. We also illustrate the performance of our cost function, optimized with a simple sequential heuristic, on several synthetic and real-world data sets, including the Newsgroup20 and the MovieLens100k data sets.

«

We present an information-theoretic cost function for co-clustering, i.e., for simultaneous clustering of two sets based on similarities between their elements. By constructing a simple random walk on the corresponding bipartite graph, our cost function is derived from a recently proposed generalized framework for information-theoretic Markov chain aggregation. The goal of our cost function is to minimize relevant information loss, hence it connects to the information bottleneck formalism. Moreo...

»